Muhammed Selim Korkutata | Anadolu | Getty Images

In the two-plus years since generative artificial intelligence took the world by storm following the public release of ChatGPT, trust has been a perpetual problem.

Hallucinations, bad math and cultural biases have plagued results, reminding users that there is a limit to how much we can rely on AI, at least for now.

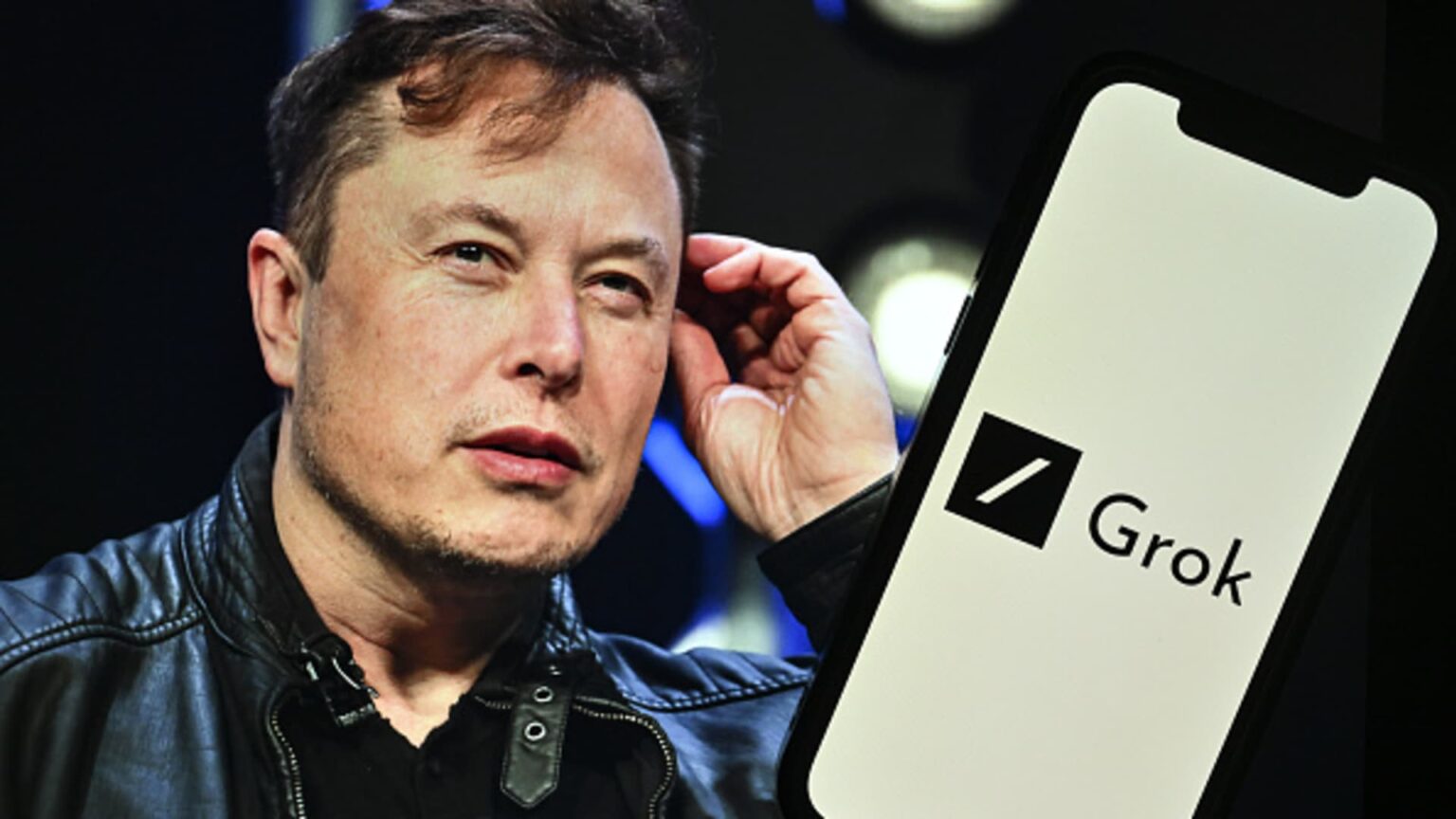

Elon Musk’s Grok chatbot, created by his startup xAI, showed this week that there is a deeper reason for concern: AI can be easily manipulated by humans.

Grok on Wednesday began responding to user queries with false claims of “white genocide” in South Africa. By the end of the day, screenshots of similar answers were being posted on X, even when the questions had nothing to do with the topic.

After remaining silent on the matter for more than 24 hours, xAI said late Thursday that Grok’s strange behavior was caused by an “unauthorized modification” to the chat app’s so-called system prompts, which help inform the way it behaves and interacts with users. In other words, humans were dictating the AI’s response.

The nature of the response, in this case, ties directly to Musk, who was born and raised in South Africa. Musk, who owns xAI in addition to his CEO roles at Tesla and SpaceX, has been promoting the false claim that violence against some South African farmers constitutes “white genocide,” a sentiment that President Donald Trump has also expressed.

“I think it is incredibly important because of the content and who leads this company, and the ways in which it suggests or highlights the kind of power that these tools have to shape people’s thinking and understanding of the world,” said Deirdre Mulligan, a professor at the University of California at Berkeley and an expert in AI governance.

Mulligan characterized Grok’s misstep as an “algorithmic breakdown” that tears apart the supposedly neutral nature of large language models. She said there is no reason to see Grok’s malfunction as merely an “exception.”

AI-powered chatbots created by Meta, Google and OpenAI are not “packaging” information in a neutral way, but are instead passing data through a “set of filters and values built into the system,” Mulligan said. Grok’s breakdown offers a window into how easily any of these systems can be altered to serve the agenda of an individual or a group.

Representatives of xAI, Google and OpenAI did not respond to requests for comment. Meta declined to comment.

Different from past problems

Grok’s unauthorized alteration, xAI said in its statement, violated “internal policies and core values.” The company said it would take steps to prevent similar disasters and would publish the app’s system prompts in order to “enhance your trust in Grok as a truth-seeking AI.”

This is not the first AI blunder to go viral online. A decade ago, Google’s Photos app mislabeled African Americans as gorillas. Last year, Google temporarily paused its Gemini AI image generation feature after admitting that it was offering “inaccuracies” in historical images. And OpenAI’s image generator was accused by some users of showing signs of bias in 2022, leading the company to announce that it was implementing a new technique so that images “accurately reflect the diversity of the world’s population.”

In 2023, 58% of AI decision-makers at companies in Australia, the United Kingdom and the United States expressed concern about the risk of hallucinations in a generative AI deployment, Forrester found. The survey, conducted in September of that year, included 258 respondents.

Experts told CNBC that the Grok incident is reminiscent of China’s DeepSeek, which became an overnight sensation in the United States earlier this year because of the quality of its new model and because it was reportedly built at a fraction of the cost of its American rivals.

Critics have said that DeepSeek censors topics deemed sensitive to the Chinese government. Like China with DeepSeek, Musk appears to be influencing results based on his political views, they say.

When xAI debuted Grok in November 2023, Musk said it was meant to have “a bit of wit,” “a rebellious streak” and to answer the “spicy questions” that competitors might dodge. In February, xAI blamed an engineer for changes that suppressed Grok’s answers to user questions about misinformation, keeping the names of Musk and Trump out of its replies.

But Grok’s recent obsession with “white genocide” in South Africa is more extreme.

Petar Tsankov, CEO of AI model auditing firm LatticeFlow AI, said that Grok’s blowup is more surprising than what we saw with DeepSeek, because one would expect some kind of manipulation from China.

Tsankov, whose company is based in Switzerland, said the industry needs more transparency so that users can better understand how companies build and train their models and how that influences behavior. He noted efforts by the EU to require more tech companies to provide transparency as part of broader regulations in the region.

Without a public outcry, “we can never deploy safer models,” Tsankov said, and it will be “people who will pay the price” for putting their trust in the companies that develop them.

Mike Gualtieri, an analyst at Forrester, said the Grok debacle is not likely to slow user growth for chatbots or to reduce the investments that companies are pouring into the technology. He said users have a certain level of acceptance for these sorts of occurrences.

“Whether it’s Grok, ChatGPT or Gemini, everyone expects it now,” Gualtieri said. “They’ve been told how the models hallucinate. There’s an expectation this will happen.”

Olivia Gambelin, an AI ethicist and author of the book Responsible AI, published last year, said that while this type of activity from Grok may not be surprising, it underscores a fundamental flaw in AI models.

Gambelin said it “shows that it is possible, at least with Grok models, to adjust these general-purpose foundational models at will.”

– CNBC’s Lora Kolodny and Salvador Rodriguez contributed to this report.
