Last month, Meta held its very first generative AI conference. But while the event brought some notable improvements for developers, it also felt a little underwhelming given how important AI is to the company. Now we know a bit more about why, thanks to a report in The Wall Street Journal.

According to the report, Meta originally intended to release its Llama 4 “Behemoth” model during the developer event in April, but then delayed the release to June. Now it has apparently been pushed back again, potentially until “fall or later”. Meta engineers are reportedly “struggling to significantly improve the capabilities” of the model that Mark Zuckerberg has called “the highest performing base model in the world”.

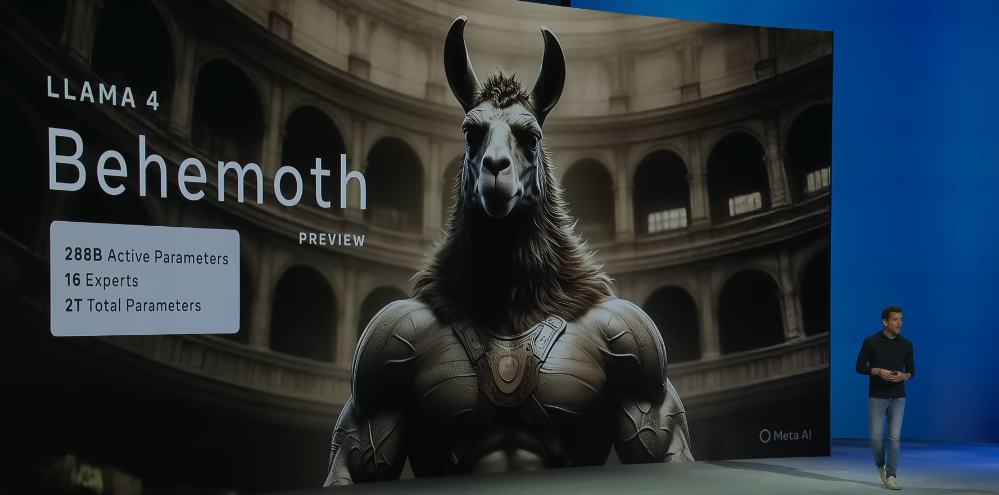

Meta has already released two smaller Llama 4 models, and has also teased a fourth, lightweight model that is apparently nicknamed “Little Llama”. Meanwhile, the “Behemoth” model will have 288 billion active parameters and “outperforms GPT-4.5, Claude Sonnet 3.7 and Gemini 2.0 Pro on several STEM benchmarks”, the company has said.

Meta has never given a firm timeline for when to expect the model. The company said last month that it was “still in training”. And while Behemoth got a few nods during the opening keynote at LlamaCon, there was no update on when it might be ready. That is probably because it could still be several months away. Inside Meta, there are apparently questions “about whether the improvements over previous versions are significant enough to justify a public release”.

Meta did not immediately respond to a request for comment. As the report notes, it would not be the first company to hit snags while racing to release new models and outpace competitors. But the delay is still notable given Meta's lofty ambitions for AI. Zuckerberg has made AI a central focus, with Meta planning substantial spending on its AI infrastructure this year.